Hey everyone! Today, I’m excited to share my progress on a falling sand game powered by neural networks. Let’s dive into the evolution of this project and the fascinating challenges I’ve encountered along the way.

Version Zero: Stable Diffusion Inspiration

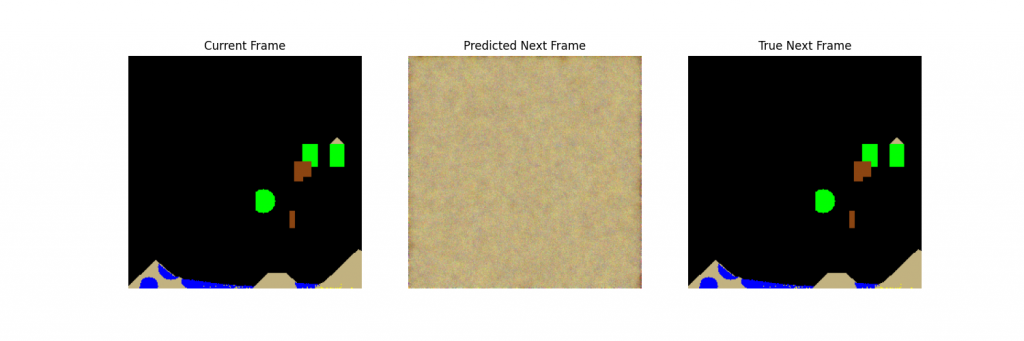

Our journey begins with an ambitious idea inspired by a paper about using Stable Diffusion to run Doom. Intrigued, I thought, “Why not apply this to a falling sand game?” Armed with my trusty RTX 3090, I set out to make it happen.

Well, folks, let’s just say the results were… interesting. While I’m sure there’s a way to coax something meaningful out of this approach, doing so with only 24 GB of VRAM proved to be quite the challenge.

Version One: The Promise of CNNs

Undeterred, I decided to pivot to a more tailored approach. Enter our first Convolutional Neural Network (CNN) model:

    import torch.nn as nn


    class CellularAutomatonCNN(nn.Module):
        def __init__(self):
            super().__init__()
            # 7 input channels, one per element type
            self.conv1 = nn.Conv2d(7, 64, kernel_size=3, padding=1)
            self.bn1 = nn.BatchNorm2d(64)
            # Widen to 128 channels, then narrow back down
            self.conv2 = nn.Conv2d(64, 128, kernel_size=3, padding=1)
            self.bn2 = nn.BatchNorm2d(128)
            self.conv3 = nn.Conv2d(128, 64, kernel_size=3, padding=1)
            self.bn3 = nn.BatchNorm2d(64)
            # 7 output channels: a next-state prediction for each cell
            self.conv4 = nn.Conv2d(64, 7, kernel_size=3, padding=1)

This model uses four convolutional layers, the first three followed by batch normalization, with the channel count widening to 128 and then narrowing again. It takes in 7 channels (one for each element type) and outputs predictions for the next state of each cell.
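To make the input and output shapes concrete, here is a minimal sketch of one simulation step with this model. The layer definitions match the class above; the `forward` wiring (conv, batch norm, ReLU), the one-hot encoding, and the `step` helper are assumptions for illustration, not necessarily the exact code in the repo.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

NUM_TYPES = 7  # matches the 7 input/output channels of the model


class CellularAutomatonCNN(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(NUM_TYPES, 64, kernel_size=3, padding=1)
        self.bn1 = nn.BatchNorm2d(64)
        self.conv2 = nn.Conv2d(64, 128, kernel_size=3, padding=1)
        self.bn2 = nn.BatchNorm2d(128)
        self.conv3 = nn.Conv2d(128, 64, kernel_size=3, padding=1)
        self.bn3 = nn.BatchNorm2d(64)
        self.conv4 = nn.Conv2d(64, NUM_TYPES, kernel_size=3, padding=1)

    def forward(self, x):
        # Assumed wiring: conv -> batch norm -> ReLU, raw logits at the end.
        x = F.relu(self.bn1(self.conv1(x)))
        x = F.relu(self.bn2(self.conv2(x)))
        x = F.relu(self.bn3(self.conv3(x)))
        return self.conv4(x)


def step(model, grid):
    """Advance an (H, W) grid of integer cell-type IDs by one predicted frame."""
    one_hot = F.one_hot(grid, NUM_TYPES)               # (H, W, 7)
    x = one_hot.permute(2, 0, 1).unsqueeze(0).float()  # (1, 7, H, W)
    with torch.no_grad():
        logits = model(x)                              # (1, 7, H, W)
    return logits.argmax(dim=1).squeeze(0)             # (H, W)


model = CellularAutomatonCNN().eval()  # eval() makes batch norm use running stats
grid = torch.randint(0, NUM_TYPES, (32, 32))  # random grid, untrained weights
next_grid = step(model, grid)
print(next_grid.shape)
```

Because every layer uses `padding=1` with a 3×3 kernel, the output grid has the same height and width as the input, so the result of `step` can be fed straight back in for the next frame.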

This version showed great promise! We had a basic set of elements (sand, water, plant, wood, acid, and fire) interacting in somewhat realistic ways. However, it wasn’t without its quirks. Liquids were a bit… chunky, shall we say?

Version Two: Expanding Horizons

Encouraged by our initial success, we decided to up the ante. Enter the ImprovedSandModel:

    import torch.nn as nn


    class ImprovedSandModel(nn.Module):
        def __init__(self, input_channels=14, hidden_size=64):
            super().__init__()
            # A 5x5 first layer sees a wider neighborhood around each cell
            self.conv1 = nn.Conv2d(input_channels, hidden_size, kernel_size=5, padding=2)
            self.bn1 = nn.BatchNorm2d(hidden_size)
            # Widen to 2 * hidden_size, then narrow back down
            self.conv2 = nn.Conv2d(hidden_size, hidden_size * 2, kernel_size=3, padding=1)
            self.bn2 = nn.BatchNorm2d(hidden_size * 2)
            self.conv3 = nn.Conv2d(hidden_size * 2, hidden_size, kernel_size=3, padding=1)
            self.bn3 = nn.BatchNorm2d(hidden_size)
            # Output has the same channel count as the input
            self.conv4 = nn.Conv2d(hidden_size, input_channels, kernel_size=3, padding=1)

This model introduced several improvements:

- Increased input channels to 14, allowing for more element types.

- Used a larger initial kernel size (5×5) to capture more context.

- Made the expand-then-contract channel widths configurable through hidden_size (64 → 128 → 64 by default).
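To put a number on “more context”, the receptive field of a stack of stride-1 convolutions can be worked out by hand. The helper below is just that arithmetic as a sketch (`receptive_field` is a name made up for this example), applied to the kernel sizes of versions one and two.

```python
def receptive_field(kernel_sizes):
    """Receptive field width of stacked stride-1, undilated convolutions."""
    field = 1
    for k in kernel_sizes:
        # Each k x k layer lets a cell see (k - 1) more cells across.
        field += k - 1
    return field


version_one = receptive_field([3, 3, 3, 3])  # four 3x3 layers
version_two = receptive_field([5, 3, 3, 3])  # 5x5 first layer, then three 3x3
print(version_one, version_two)  # 9 11
```

So each predicted cell in version two can depend on an 11×11 neighborhood of the previous frame, up from 9×9 in version one.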

The results? We hit a smooth 60 FPS and saw improved interactions between elements. However, we still faced some challenges. Time-dependent phenomena like fire didn’t fade as expected, and I noticed a peculiar “waterfall” effect where the tops of elements seemed to fall slower than the bottoms.

Version Three: Simplifying for Success and Capturing Time

Sometimes, less is more. Our next iteration, the SimpleSandModel, took this to heart:

    import torch.nn as nn


    class SimpleSandModel(nn.Module):
        def __init__(self, input_channels=14, hidden_size=32):
            super().__init__()
            # Three 3x3 layers, no batch normalization
            self.conv1 = nn.Conv2d(input_channels, hidden_size, kernel_size=3, padding=1)
            self.conv2 = nn.Conv2d(hidden_size, hidden_size, kernel_size=3, padding=1)
            # Output has the same channel count as the input
            self.conv3 = nn.Conv2d(hidden_size, input_channels, kernel_size=3, padding=1)

This streamlined model maintains the 14 input channels but reduces the complexity:

- Uses only three convolutional layers.

- Keeps a consistent hidden size throughout.

- Removes batch normalization layers.

- Trains only on data with lots of movement.
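One way to pick out “data with lots of movement” is to measure how many cells change between consecutive frames and drop the pairs where almost nothing happens. This is only a sketch of that idea: the function names and the 5% threshold are made up for illustration, and the actual filtering in the repo may work differently.

```python
def changed_fraction(frame, next_frame):
    """Fraction of cells whose element ID differs between two frames."""
    total = 0
    changed = 0
    for row, next_row in zip(frame, next_frame):
        for cell, next_cell in zip(row, next_row):
            total += 1
            changed += cell != next_cell
    return changed / total if total else 0.0


def keep_active_pairs(frames, min_changed=0.05):
    """Keep (frame, next_frame) pairs where at least `min_changed` of cells change."""
    pairs = zip(frames, frames[1:])
    return [(a, b) for a, b in pairs if changed_fraction(a, b) >= min_changed]


# Tiny 2x2 recording: 0 = empty, 1 = sand (placeholder IDs).
frames = [
    [[1, 0], [0, 0]],  # sand in the top-left corner
    [[0, 0], [1, 0]],  # it falls one cell: 2 of 4 cells change
    [[0, 0], [1, 0]],  # nothing moves: 0 of 4 cells change
]
active = keep_active_pairs(frames)
print(len(active))  # 1
```

Only the first pair survives, so the model never trains on the frame where the sand just sits there.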

And wow, did it deliver! We’re still cruising at 60 FPS, but now we’re seeing much better behavior from temporary elements like fire. The fire now fades naturally over time, creating a much more realistic effect. Overall, this version feels much more natural and responsive, capturing the dynamic nature of our falling sand world. That said, water still doesn’t fall correctly, and some elements don’t work right at all.

The Road Ahead: Curriculum Learning

Looking to the future, I’m excited to explore curriculum learning. The plan is to gather data for each element individually, then in pairs, and finally all together. We’ll also feed the model three frames at a time to give it more temporal context. During training, we’ll feed these examples progressively, starting with the simplest scenarios. It’s a work in progress, but I have high hopes for this approach!
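Here is a rough sketch of what that plan could look like in code. The element list comes from version one; `curriculum_stages` and `frame_windows` are hypothetical helpers, and using a sliding window over the recording is an assumption about how the three-frame input would be built.

```python
from itertools import combinations

ELEMENTS = ["sand", "water", "plant", "wood", "acid", "fire"]


def curriculum_stages(elements):
    """Order training scenarios from simplest to most complex."""
    singles = [(e,) for e in elements]        # one element at a time
    pairs = list(combinations(elements, 2))   # every pair of elements
    everything = [tuple(elements)]            # all elements together
    return [singles, pairs, everything]


def frame_windows(frames, context=3):
    """Yield (context frames, target frame) samples from one recording."""
    for i in range(len(frames) - context):
        yield frames[i:i + context], frames[i + context]


stages = curriculum_stages(ELEMENTS)
print([len(stage) for stage in stages])  # [6, 15, 1]

# Frames are stand-in integers here; real frames would be element grids.
samples = list(frame_windows([0, 1, 2, 3, 4]))
print(samples[0])  # ([0, 1, 2], 3)
```

Training would walk through `stages` in order, recording and training on each scenario before moving to the next. If the three context frames were concatenated along the channel axis, the first layer would need 3 × 14 = 42 input channels.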

The Big Picture

The most exciting takeaway from this journey? We’ve essentially created an accelerator for falling sand games. Traditional versions tend to slow down as you add more elements and interactions. Our AI-powered version, on the other hand, maintains a consistent frame rate regardless of complexity, because the cost of one forward pass depends on the grid size and the network architecture, not on what’s happening on screen. It’s a testament to the power of neural networks in game development!
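A back-of-the-envelope count shows why the frame rate stays flat. The sketch below counts multiply-accumulates for the three layers of SimpleSandModel; the 256×256 grid is a made-up size for illustration, since the actual resolution isn’t stated here.

```python
def conv_macs_per_cell(layers):
    """Multiply-accumulates per grid cell for stride-1, same-padded conv layers.

    Each layer is (in_channels, out_channels, kernel_size).
    """
    return sum(k * k * c_in * c_out for c_in, c_out, k in layers)


# Layer shapes of SimpleSandModel with its default arguments.
SIMPLE_SAND_MODEL = [(14, 32, 3), (32, 32, 3), (32, 14, 3)]

per_cell = conv_macs_per_cell(SIMPLE_SAND_MODEL)
print(per_cell)  # 17280

WIDTH, HEIGHT = 256, 256  # hypothetical grid size
per_frame = per_cell * WIDTH * HEIGHT
print(per_frame)  # about 1.13 billion per frame
```

Nothing in that count depends on how many cells are occupied or how many are reacting with each other, so an empty grid and a full one cost the same. Adding element types would only change the channel counts of the first and last layers.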

Closing Thoughts

All the source code for each version is available on GitHub. I encourage you to check it out, play with the models, and see if you can make them even better. Who knows? You might just create the next breakthrough in AI-powered game physics!